July 2, 2014

This is 2014 backwards: OSX blit performance

I've been investigating, once again, the performance of drawing code-rendered RGBA bitmaps to NSViews in OSX. I found that on my Retina Macbook Pro (when the application was not in low-resolution legacy mode), calling CGContextSetInterpolationQuality with kCGInterpolationNone would cause CGContextDrawImage() to be more than twice as fast (with less filtering of the image, which was a fair tradeoff and often desired).

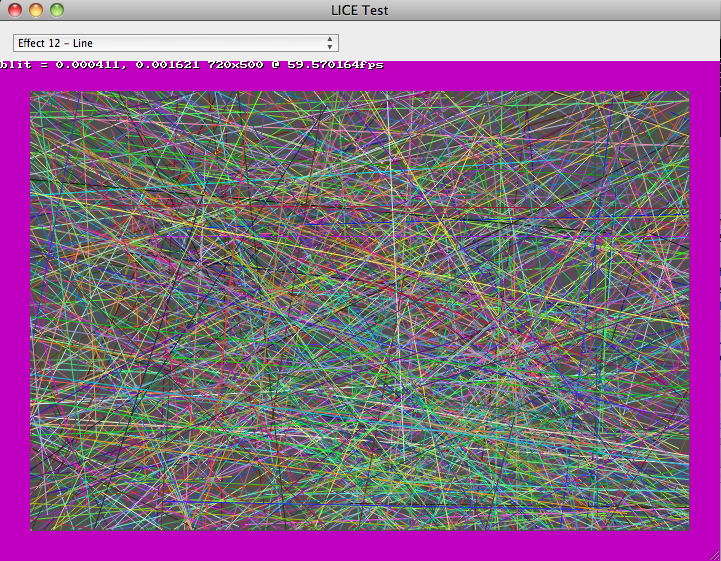

The above performance gain aside, I am still not satisfied with the bitmap drawing performance on recent OSX versions, which has led me to benchmark SWELL's blitting code. My test uses the LICE test application, with a screen full of lines, an opaque NSView, and 720x500 resolution.
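A benchmark like this boils down to timing the blit call on every frame. Here is a minimal sketch of that kind of measurement; it is not the actual LICE test code, and the names `now_ms`, `time_call_ms` and `example_workload` are made up for illustration. It assumes POSIX `clock_gettime()`, which the OSX versions discussed here do not provide (there you would likely use `mach_absolute_time()` instead).

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <string.h>
#include <time.h>

/* Hypothetical helper: monotonic wall-clock time in milliseconds. */
double now_ms(void)
{
    struct timespec ts;
    clock_gettime(CLOCK_MONOTONIC, &ts);
    return (double)ts.tv_sec * 1000.0 + (double)ts.tv_nsec / 1.0e6;
}

typedef void (*blit_fn)(void *ctx);

/* Hypothetical helper: run fn(ctx) once and return the elapsed time in ms. */
double time_call_ms(blit_fn fn, void *ctx)
{
    assert(fn != NULL);
    double start = now_ms();
    fn(ctx);
    return now_ms() - start;
}

/* Stand-in workload: clears a 720x500 32-bit buffer.
   A real test would call the blit (e.g. BitBlt()) here instead. */
void example_workload(void *ctx)
{
    assert(ctx != NULL);
    memset(ctx, 0, 720 * 500 * 4);
}
```

Calling `time_call_ms()` around the blit once per frame and averaging over a second or so gives per-frame numbers comparable to the ones quoted in this post.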

OSX 10.6 vs 10.8 on a C2D iMac

My (C2D 2.93GHz) iMac running 10.6 easily runs the benchmark at close to 60 FPS, using about 45% of one core, with the BitBlt() call typically taking 1ms for each frame.

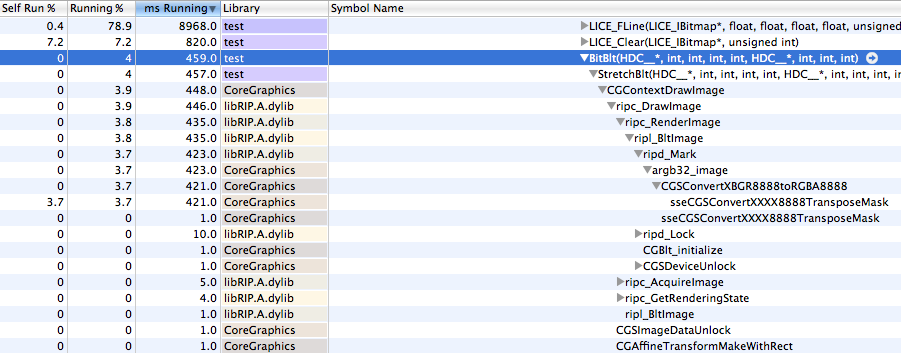

Here is a profile -- note that CGContextDrawImage() accounts for a modest 3.9% of the total CPU use:

It might be possible to reduce the work required by changing our bitmap representation from ABGR to RGBA (avoiding sseCGSConvertXXXX8888TransposeMask and performing a memcpy() instead), but in my opinion 1ms for a good sized blit (and less than 4% of total CPU time for this demo) is totally acceptable.
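To make the difference concrete, here is a rough sketch of the two kinds of copy. This is not what CoreGraphics does internally (its routine is SSE-optimized); the function names are hypothetical, and I am assuming "ABGR" and "RGBA" describe the byte order in memory.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical helper: reverse the byte order of each 32-bit pixel,
   turning in-memory A,B,G,R into R,G,B,A. Every pixel gets shuffled. */
void convert_abgr_to_rgba(const uint8_t *src, uint8_t *dst, size_t npixels)
{
    assert(src != NULL && dst != NULL);
    for (size_t i = 0; i < npixels; i++) {
        dst[4 * i + 0] = src[4 * i + 3]; /* R */
        dst[4 * i + 1] = src[4 * i + 2]; /* G */
        dst[4 * i + 2] = src[4 * i + 1]; /* B */
        dst[4 * i + 3] = src[4 * i + 0]; /* A */
    }
}

/* If source and destination already share a layout, the whole
   transfer is one memcpy() with no per-pixel work. */
void copy_same_layout(const uint8_t *src, uint8_t *dst, size_t npixels)
{
    assert(src != NULL && dst != NULL);
    memcpy(dst, src, npixels * 4);
}
```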

I then rebooted the C2D iMac into OSX 10.8 (Mountain Lion) for a similar test.

Running the same benchmark on the same hardware in Mountain Lion, we see that each call to BitBlt() takes over 6ms, the application struggles to exceed 57 FPS, and the CPU usage is much higher, at about 73% of a core.

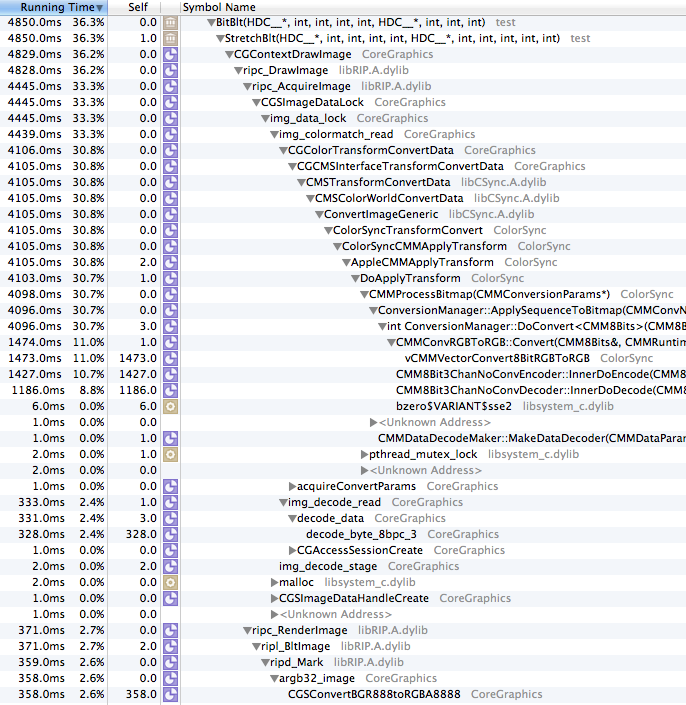

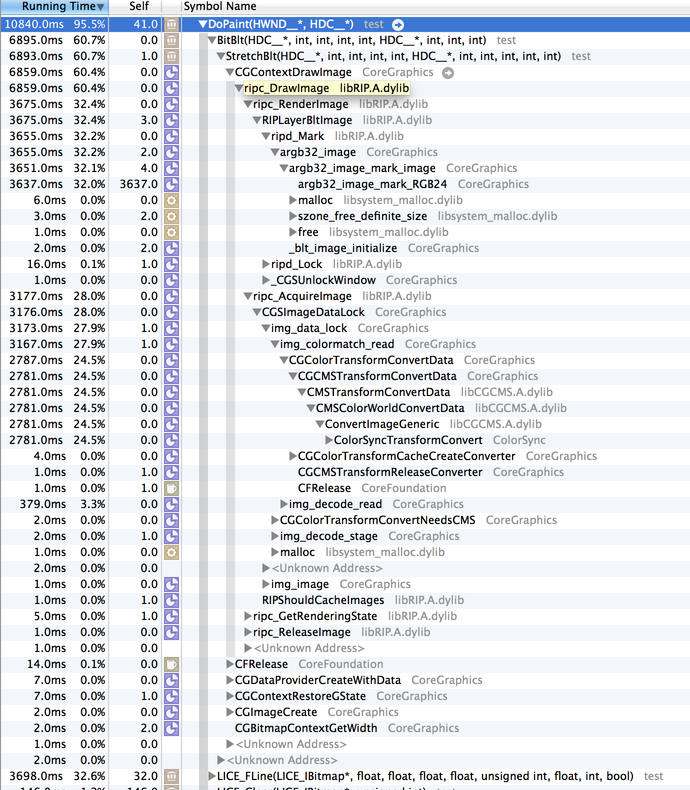

Here is the time sampling of the CGContextDrawImage() -- in this case it accounts for 36% of the total CPU use!

Looking at the difference between these functions, it is obvious where most of the additional processing takes place -- within img_colormatch_read and CGColorTransformConvertData, where it apparently applies color matching transformations.

I'm happy that Apple cares about color matching, but forcing it on (without giving developers any control over it) is wasteful. I'd much rather have the ability to transform the colors before rendering and then blit quickly to the screen than have every single pixel color-transformed on its way to the screen. There may be some magical way to pass the right colorspace value to CGImageCreate() to bypass this, but I have not found it yet (and I have spent a great deal of time looking, and trying things like querying the monitor's colorspace).

That's what OpenGL is for!

But wait, you say -- the preferred way to quickly draw to screen is OpenGL.

Updating a complex project to use OpenGL would be a lot of work, but for this test project I did implement a very naive OpenGL blit, which enabled an OpenGL context for the view and created a texture for drawing each frame, more or less like:

    // Rectangle textures are addressed in texels, not normalized 0..1 coordinates
    glDisable(GL_TEXTURE_2D);
    glEnable(GL_TEXTURE_RECTANGLE_EXT);

    // Create a throwaway texture for this frame
    GLuint texid=0;
    glGenTextures(1, &texid);
    glBindTexture(GL_TEXTURE_RECTANGLE_EXT, texid);

    // sw: row length (in pixels) of the source bitmap, p: its pixel data
    glPixelStorei(GL_UNPACK_ROW_LENGTH, sw);
    glTexParameteri(GL_TEXTURE_RECTANGLE_EXT, GL_TEXTURE_MIN_FILTER, GL_LINEAR);
    glTexImage2D(GL_TEXTURE_RECTANGLE_EXT,0,GL_RGBA8,w,h,0,GL_BGRA,GL_UNSIGNED_INT_8_8_8_8, p);

    // Draw a single quad covering the whole view
    glBegin(GL_QUADS);
    glTexCoord2f(0.0f, 0.0f);
    glVertex2f(-1,1);
    glTexCoord2f(0.0f, h);
    glVertex2f(-1,-1);
    glTexCoord2f(w,h);
    glVertex2f(1,-1);
    glTexCoord2f(w, 0.0f);
    glVertex2f(1,1);
    glEnd();

    glDeleteTextures(1,&texid);
    glFlush();

This resulted in better performance on OSX 10.8, each BitBlt() taking about 3ms, framerate increasing to 58, and the CPU use going down to about 50% of a core. It's an improvement over CoreGraphics, but still not as fast as CoreGraphics on 10.6.

The memory use when using OpenGL blitting increased by about 10MB, which may not sound like much, but if you are drawing to many views, the RAM use would potentially increase with each view.

I also tested the OpenGL implementation on 10.6, but it was significantly slower than CoreGraphics: 3ms per frame and nearly 60 FPS, but with CPU use at 60% of a core. If you do ever implement OpenGL blitting, you will probably want to disable it for 10.6 and earlier.

Core 2 Duo?! That's ancient, get a new computer!

After testing on the C2D, I moved back to my modern quad-core i7 Retina Macbook Pro running 10.9 (Mavericks) and did some similar tests.

- Normal: 12-14ms per frame, 46 FPS, 70% of a core CPU use

- Normal, in "Low Resolution" mode: 6-7ms per frame, 58 FPS, 60% of a core CPU use

- Normal, without the kCGInterpolationNone: 29ms per frame, 29 FPS, 70% of a core CPU use

- Normal, in "Low Resolution" mode, without kCGInterpolationNone: same as with kCGInterpolationNone.

- GL: 1-2ms per frame, 57 FPS, 37% of a core CPU

- GL, in "Low Resolution" mode: 1-2ms per frame, 57 FPS, 40% of a core CPU
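One way to read these numbers is as a share of the per-frame time budget. The sketch below assumes a 60 Hz display (the benchmark tops out near 60 FPS, but the refresh rate is my assumption), and the function names are hypothetical. The blit time alone only gives an upper bound on the frame rate, since the test also has to render its lines each frame.

```c
#include <assert.h>

/* Hypothetical helper: highest frame rate possible if the blit were the
   only per-frame cost, capped at the display refresh rate. */
double blit_fps_ceiling(double blit_ms, double refresh_hz)
{
    assert(blit_ms > 0.0 && refresh_hz > 0.0);
    double fps = 1000.0 / blit_ms;
    return fps < refresh_hz ? fps : refresh_hz;
}

/* Hypothetical helper: fraction of one refresh interval spent in the blit
   (values above 1.0 mean the blit alone misses the refresh). */
double frame_budget_used(double blit_ms, double refresh_hz)
{
    assert(refresh_hz > 0.0);
    return blit_ms / (1000.0 / refresh_hz);
}
```

For example, a 29ms blit caps the frame rate at about 34 FPS before any drawing happens, which fits the 29 FPS measured without kCGInterpolationNone, while a 6ms blit uses roughly a third of a 60 Hz frame.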

Interestingly, "Low Resolution" mode is faster in all modes except for GL, where apparently it is slower (I'm guessing because the hardware accelerates the GL scaling, whereas "Low Resolution" mode puts it through a software scaler at the end).

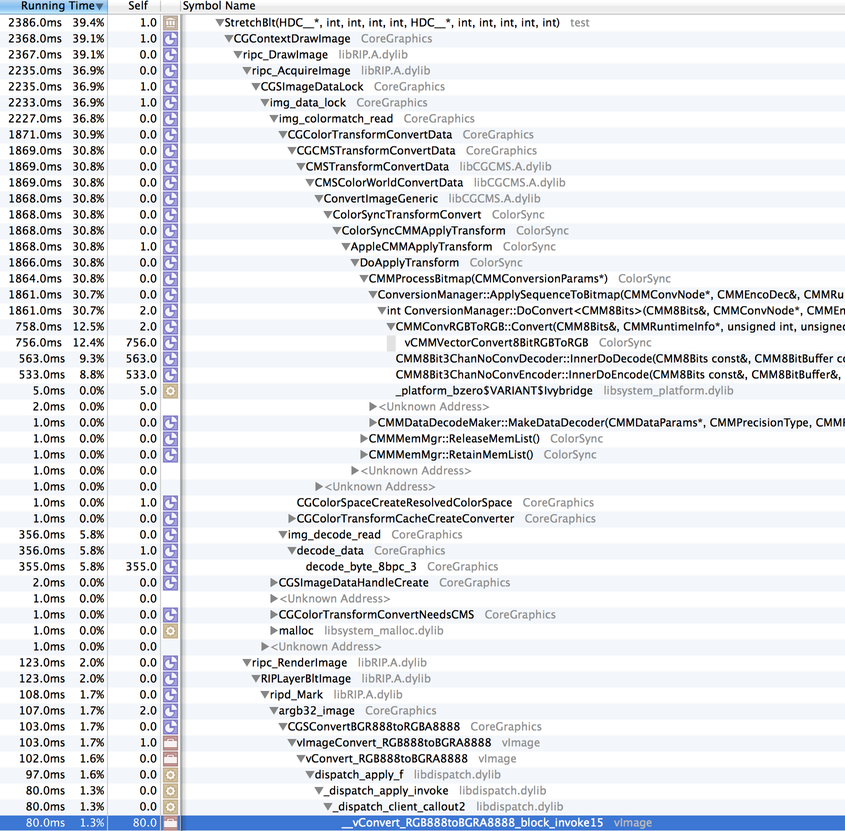

Let's see where the time is spent in the "Normal, Low Resolution" mode:

This looks very similar to the 10.8, non-retina rendering, though some function names have changed. There is the familiar img_colormatch_read/CGColorTransformConvertData call which is eating a good chunk of CPU. The ripc_RenderImage/ripd_Mark/argb32_image stack is similar to 10.8, and reasonable in CPU cycles consumed.

Looking at the Low Resolution mode, it really does behave similarly to 10.8 (though it's depressing to see that it still takes as long to run on an i7 as 10.8 did on a C2D, hmm). Let's look at the full-resolution Retina mode:

img_colormatch_read is present once again, but what's new is that ripc_RenderImage/ripd_Mark/argb32_image have a new implementation, calling argb32_image_mark_RGB24 -- and argb32_image_mark_RGB24 is a beast! It uses more CPU than just about anything else. What is going on there?

Conclusions

If you ever feel as if modern OSX versions have gotten slower when it comes to updating the screen, you would be right. The basic method of drawing pixels rendered in a platform-independent fashion to screen has gotten significantly slower since Snow Leopard, most likely in the name of color accuracy. In my opinion this is an oversight on Apple's part, and they should extend the CoreGraphics APIs to allow manual application of color correction.

Additionally, I'm suspicious that something odd is going on within the function argb32_image_mark_RGB24, which appears to only be used on Retina displays, and that the performance of that function should be evaluated. Improving the efficiency of that function would have a positive impact on the performance of many third party applications (including REAPER).

If anybody has an interest in duplicating these results or doing further testing, I have pushed the updates to the LICE test application to our WDL git repository (see WDL/lice/test/).

Update: July 3, 2014

After some more work, I've managed to get the CPU use down to a respectable level in non-Retina mode (10.8 on the iMac, 10.9/Low Resolution on the Retina MBP), by using the system monitor's colorspace:

    CMProfileRef systemMonitorProfile = NULL;
    CMError getProfileErr = CMGetSystemProfile(&systemMonitorProfile);
    if(noErr == getProfileErr)
    {
      cs = CGColorSpaceCreateWithPlatformColorSpace(systemMonitorProfile);
      CMCloseProfile(systemMonitorProfile);
    }

Using this colorspace with CGImageCreate() prevents CGContextDrawImage() from calling img_colormatch_read/CGColorTransformConvertData/etc. On the C2D running 10.8, this gets the blit down to 1-2ms per frame, which is reasonable.

However, this mode appears to be slower on the Retina MBP in high resolution mode, as it calls argb32_image_mark_RGB32 instead of argb32_image_mark_RGB24 (presumably operating on my buffer directly rather than the intermediate colorspace-converted buffer), which is even slower.

Update: July 3, 2014, later

OK, if you provide a bitmap that is twice the size of the drawing rect, you can avoid argb32_image_mark_RGBXX, and get the Retina display to update in about 5-7ms, which is a good improvement (but by no means impressive, given how powerful this machine is). I made a very simple software scaler (that turns each pixel into 4), and it uses very little CPU. So this is acceptable as a workaround (though Apple should really optimize their implementation). We're at least around 6ms, which is way better than 12-14ms (or 29ms which is where we were last week!), but there's no reason this can't be faster. Update (2017): the mentioned method was only "faster" because it triggered multiprocessing, see this new post for more information.
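For reference, a scaler of this kind can be as simple as the sketch below. This is not the actual SWELL code; `scale2x` and its parameters are hypothetical names, and the spans are assumed to be row strides measured in pixels.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical 2x nearest-neighbor upscaler: every source pixel becomes
   a 2x2 block in the destination.
   src_span / dst_span are row strides in pixels (not bytes).
   dst must hold 2*h rows of dst_span pixels, with dst_span >= 2*w. */
void scale2x(const uint32_t *src, int w, int h, int src_span,
             uint32_t *dst, int dst_span)
{
    assert(src != NULL && dst != NULL);
    assert(w >= 0 && h >= 0 && src_span >= w && dst_span >= 2 * w);

    for (int y = 0; y < h; y++) {
        const uint32_t *in = src + (size_t)y * src_span;
        uint32_t *out0 = dst + (size_t)(2 * y) * dst_span; /* first output row  */
        uint32_t *out1 = out0 + dst_span;                  /* second output row */

        for (int x = 0; x < w; x++) {
            uint32_t p = in[x];
            out0[2 * x] = out0[2 * x + 1] = p;
            out1[2 * x] = out1[2 * x + 1] = p;
        }
    }
}
```

The doubled buffer is then handed to the blit in place of the original, so the destination rect maps 1:1 onto Retina pixels.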

As a nice side effect, I'm adding SWELL_IsRetinaDC(), so we can start making some things Retina aware -- JSFX GUIs would be a good place to start...
